One of the challenges of working in training is keeping up with changes in the field, especially advances in technology. To help you make sense of current and upcoming training technologies, Training magazine offers an assessment. The print version of this article describes 10 technologies and assesses their prospects for the next five years. The online version also assesses the “newness” of each technology (as some technologies being promoted as “new” might actually be old ones with new names) and provides examples of use.

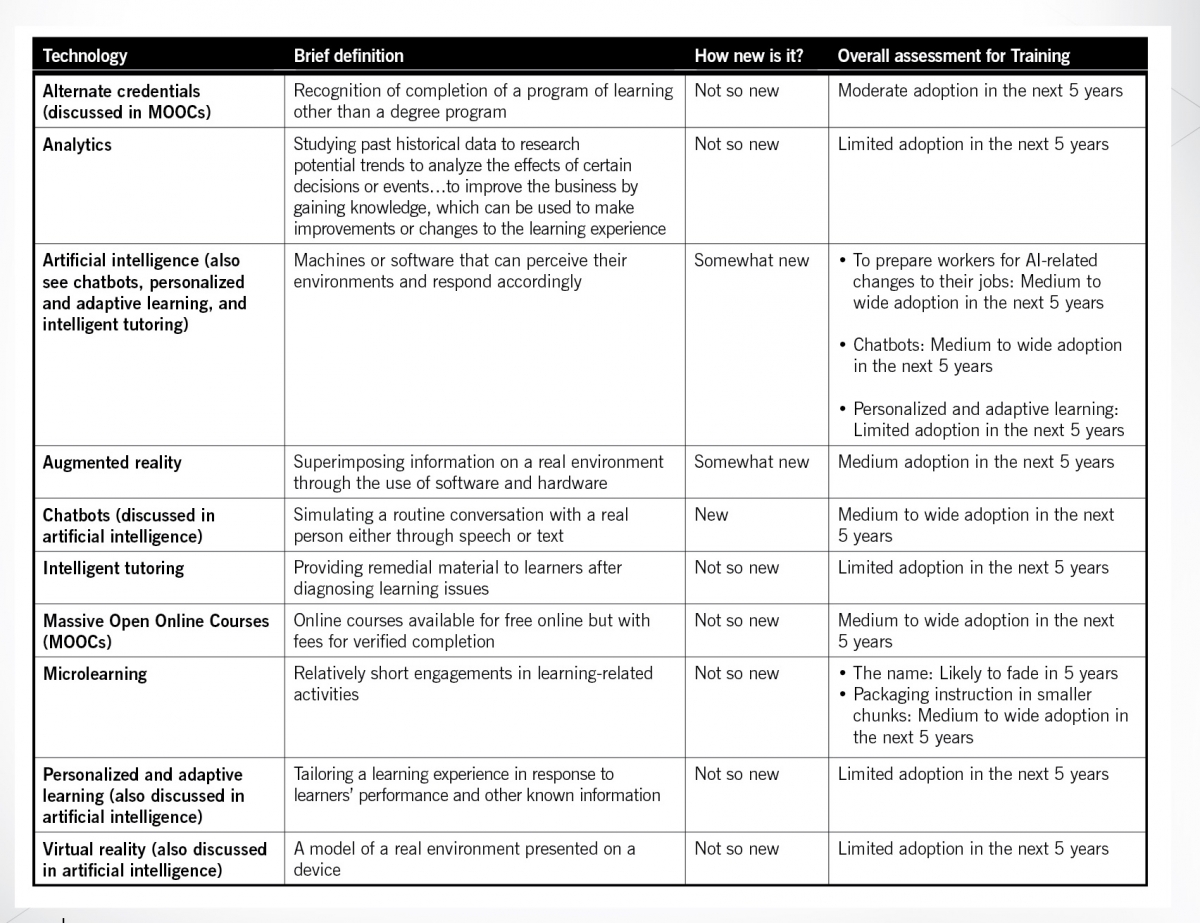

Table 1 summarizes our assessments of the technologies (the table can also be downloaded at the end of this article).

ANALYTICS

According to the “Business Dictionary,” analytics refers to “studying past historical data to research potential trends, to analyze the effects of certain decisions or events…to improve the business by gaining knowledge that can be used to make improvements or changes.”

A specialized branch of analytics focuses on learning. It assesses data about student characteristics, student performance in courses (including completions and performance on learning assessments), and student pathways through courses to strengthen the learning experience and its outcomes. The data is collected from existing systems, such as learning management systems (LMSs) and e-learning software; analyzed by specialized applications; and presented as reports, including dashboards that give a visual snapshot of an organization’s operations.

At its best, because of the power of specialized applications, learning analytics can predict relationships that might not have been observed in other ways. For example, Guild Learning discovered that delays in processing textbook reimbursements affected the ability of Walmart employees to persist in online degree programs, so Walmart changed the reimbursement process.

“Newness” of the technology: Learning professionals have been collecting data on the characteristics and performance of learners for decades. For example, reports on learner characteristics and learning outcomes regularly appeared in Training and Training and Development (now TD) magazines in the 1970s and 1980s. Furthermore, the first dashboards appeared more than a decade ago, with ASTD’s (now ATD’s) Workplace Learning and Performance Scorecard.

But a few things have changed in the last decade: the rise of specialized learning analytics programs, such as Metrics That Matter; the increase in the amount of data collected about learners and their behaviors from e-learning software; the increased use of e-learning—meaning that analytics programs have more data about learners to analyze; the rise of services that let training groups compare their performance to that of other organizations; and the rise of new analysis frameworks for analyzing the data—focusing as much on the performance of the training group as on any individual course.

Example of use: One chief learning officer reported that they use analytics to benchmark their training organization against best-in-class, and to assess the overall performance of the organization at three levels: strategic (return on expectations, distribution of programs against strategic needs of the organization, and alignment with business drivers); operational (such as the support of managers and operational variables); and tactical (such as satisfaction rate).

Overall assessment: Limited adoption is anticipated in the next five years. Although LMSs and e-learning tools can collect extensive data about learners, analytics programs often need large data sets with thousands of learners to generate predictive analytics (that is, to find relationships in the data). However, few individual training courses have thousands of learners, and even fewer curricula do. So the systems primarily are useful for preparing readable reports on current operations. Although services let training groups benchmark themselves against other training groups, users note concerns with the quality of the data from benchmarking participants, and that, in turn, raises concerns about the extent of benchmarking that is feasible. This is similar to an assessment made by the Joint Research Centre of the European Commission, which concluded, “Much of the current work on learning analytics concentrates on the supply side: the development of tools, data, models, and prototypes,” with “considerably less work on the demand side,” that is, on how learning professionals can use analytics. This, however, contrasts with the “2018 Horizon Report,” which focuses on adoption of technologies in higher education, not training and development.

ARTIFICIAL INTELLIGENCE

Artificial intelligence refers to machines or software that can perceive their environments and respond accordingly. For example, “smart” devices such as the Amazon Echo that can play a soundtrack, read out a day’s schedule, or turn on lights by voice command display some level of artificial intelligence. In a business context, The New York Times reports that State Auto Insurance is using “robotic process automation” or “bots” to perform repetitive system tasks that “drive you crazy,” such as the time-consuming task of recording administrative information about insurance policies whose rates were not reviewed. In the research community, artificial intelligence encompasses a diverse set of interests, including natural language processing, neural networks, robotics, artificial perception (such as vision detectors), complexity theory, and cybernetics (which is also a foundation of educational technology).

In educational contexts, artificial intelligence is believed to have several possible applications. One is personalization, which involves tailoring an instructional program to the needs of a learner based on his or her responses to prior test questions and other known information about the learner. Another is tutoring, which involves a dialogue-like experience in which the computer diagnoses learning challenges and presents support tailored in response to the diagnosis.

“Newness” of the technology: As a field, artificial intelligence was named in the 1950s, but did not begin to yield general applications that affect ordinary users until the 1980s and early 1990s. That period saw the rise of wizards that perform tasks for users (now such an essential component of mobile apps that no one uses the term “wizard” anymore) and decision support tools such as the ones that help a system automatically determine whether to increase credit limits.

Researchers have pursued applications in education most of that time, especially in regard to intelligent tutoring and personalization. Some of the first commercial products have emerged in the last decade, although they are almost exclusively aimed at primary, secondary, and higher education.

Although educators have focused on intelligent tutoring and personalization, professional communicators have focused on chatbots, which simulate a real conversation with a real person through speech (such as with Amazon’s Alexa or Apple’s Siri) or text in which a system rather than a person responds to the prompts. Chatbots rely on a knowledge base filled with responses to anticipated questions and requests. Although prototypes of chatbots were tested in the 1960s and 1970s, commercial chatbots began appearing in the last few years.
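A minimal Python sketch of that knowledge-base idea follows, assuming a simple keyword match against a few hand-written entries. The entries, the `reply` function, and the matching rule are all hypothetical and chosen only for illustration; commercial chatbots typically rely on far more sophisticated language processing.

```python
import re

# Hypothetical knowledge base: keywords for an anticipated question -> canned response.
KNOWLEDGE_BASE = {
    ("enroll", "register", "signup"): "You can enroll through the course catalog.",
    ("benefits", "insurance"): "Benefits questions are handled by the HR service desk.",
    ("certificate", "completion"): "Completion certificates are issued after the final quiz.",
}

FALLBACK = "Sorry, I don't have an answer for that yet."


def reply(message: str) -> str:
    """Return the response whose keywords overlap most with the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    best_answer, best_hits = FALLBACK, 0
    for keywords, answer in KNOWLEDGE_BASE.items():
        hits = len(words & set(keywords))
        if hits > best_hits:
            best_answer, best_hits = answer, hits
    return best_answer


if __name__ == "__main__":
    print(reply("How do I enroll in a course?"))
    print(reply("What is the weather today?"))
```

Any request the knowledge base does not anticipate falls through to the fallback response, which is why the quality of a chatbot of this kind depends heavily on how well its authors predict what users will ask.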

In addition to applications in education, futurists are concerned about the effects of artificial intelligence and other automation on jobs, with some predicting that AI and automation could replace as many as 40 percent of all jobs by 2030. Other experts have less dire warnings, but anticipate the nature of jobs could drastically change, much as computers drastically changed the way people performed work when they entered the workplace between the 1960s and 1990s. Preparing people for these drastically changed jobs could generate demand for training.

Example of use: Long-time e-learning expert Kevin Kruse describes his experiences of trying to build a chatbot to serve as a leadership coach in a recent issue of Forbes online. Anticipated uses in 2018 focus on broader HR tasks, including fielding common questions and inquiries related to recruitment and benefits.

Overall assessment: Depends on the application:

- Demand for training to prepare workers for job-related changes resulting from AI: Medium to wide adoption in the next five years, as employers begin adopting AI. Deloitte reports investment in AI-related software and services is expected to double between 2016 and 2020, and workers will need training to handle reformulated responsibilities.

- Adoption of chatbots, either to support training operations or to provide performance support on the job: Medium to wide adoption in the next five years. In terms of support with enrollment and other training administration, this aligns with a general expectation of the adoption of chatbots for self-service Human Resources. In terms of performance support, this aligns with the general expectation of growth in chatbots for marketing and customer support in general.

- Adoption of personalized and adaptive learning and intelligent tutoring: Limited adoption in the next five years. Strides are being made, but they are occurring in subjects with vast numbers of students (such as mathematics-related subjects, which have millions of students each year) and more concrete subject matter (also favoring mathematics). That’s partly because of the technology itself: AI needs hundreds, if not thousands, of example materials to “train” the system. Preparing those materials is costly, which favors courses taken by hundreds of thousands or millions of students in a given year, and few, if any, training programs have that many.

AUGMENTED AND VIRTUAL REALITY

Many training professionals lump augmented and virtual reality together because both contain the word “reality” and involve technology. But they are actually separate technologies:

- Augmented reality superimposes information over a user’s view of a real environment. In some cases, the information might be superimposed while the user wears or uses special equipment, such as scanning a menu in Japanese with the camera of a mobile device and seeing its English translation on the screen. In other cases, the information is superimposed on a real object, such as superimposing an image of a real dinosaur over the skeleton of one displayed in a museum.

- Virtual reality is a simulation of a real scenario on a special device, such as a flight simulator or Second Life, a virtual world that was popular in the late 2000s.

Both have applications in training. Augmented reality can provide learners in technical training with the names of parts in an engine as they scan the engine with their mobile phones (much like the menu example above). At the least, this provides more complete information to learners in its context than a line drawing in a manual or on a slide. At the most, some research conducted in schools and higher education suggests augmented reality can promote motivation to learn.

Virtual reality lets users practice skills in “safe” environments, especially important for people learning risky procedures such as handling a plane or train in distress and medical procedures.

“Newness” of the technology: Training and Development professionals have been aware of both technologies for quite some time. Augmented reality is the newer of the two, a term coined in 1990 at Boeing, but by 2012, enough work in augmented reality had been conducted that Tech Trends, an educational technology journal, could publish a summary of the literature in the field. Interest in augmented reality has grown substantially in the last five years as awareness of the technology grows, along with the tools for creating it. Once Training and Development professionals master the concept of superimposing information and the technology for doing so, it is probably the easier of the two to implement.

Virtual reality is much older, and trainers began discussing its applications in the 1970s, with interest substantially growing in the 1980s with the advent of personal computing. By the 1990s, flight simulators and medical simulators were increasingly common. Because virtual reality has the potential to contextualize material, trainers have sought to use it in other situations.

The challenge has always been cost. Part of the cost has been the technology, which has been forbiddingly costly to develop and use. Recent developments in technology have promised to reduce that cost, which explains the recent resurgence of interest.

Enthusiasts might overlook design costs, which technology often does not substantially lower. Virtual reality lets learners act in a model of a real environment, which requires anticipating each possible action learners could take and providing a response. This takes time. Bryan Chapman, who has studied the length of time needed to prepare an hour of completed instruction in a variety of formats, labels this type of work as Level 3 e-learning. He reports that this type of e-learning takes between 217 and 716 hours of effort for one finished hour of instruction, with an average of 490 hours. Some complex simulations can require more than 1,600 hours of effort for one finished hour of instruction. The average cost of Level 3 e-learning is $50,371 per finished hour. By contrast, Level 1 e-learning (the slidecasts typical of much e-learning today) takes between 49 and 125 hours to develop, with an average of 79 hours of effort for one finished hour of instruction, at an average cost of $10,054. Although improved technology can reduce some of the time and costs, simulation still requires substantially more time and effort than the typical e-learning course.
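To put Chapman’s figures side by side, the short Python sketch below scales the reported hours and average costs to a course of a given length. The figures come from the paragraph above; the class and function names are illustrative, and the assumption that effort and cost scale linearly with finished hours is a simplification made only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DevelopmentEstimate:
    """Effort and cost to produce ONE finished hour of instruction."""
    label: str
    min_hours: int
    avg_hours: int
    max_hours: int
    avg_cost_usd: int


# Figures as reported in the article (attributed to Bryan Chapman).
LEVEL_1 = DevelopmentEstimate("Level 1 (slidecast)", 49, 79, 125, 10_054)
LEVEL_3 = DevelopmentEstimate("Level 3 (simulation)", 217, 490, 716, 50_371)


def project_estimate(level: DevelopmentEstimate, finished_hours: float) -> dict:
    """Scale the per-hour figures to a whole course.

    Assumption: linear scaling, which real projects may not follow.
    """
    return {
        "min_hours": level.min_hours * finished_hours,
        "avg_hours": level.avg_hours * finished_hours,
        "max_hours": level.max_hours * finished_hours,
        "avg_cost_usd": level.avg_cost_usd * finished_hours,
    }


if __name__ == "__main__":
    for level in (LEVEL_1, LEVEL_3):
        print(level.label, project_estimate(level, finished_hours=2))
    ratio = LEVEL_3.avg_hours / LEVEL_1.avg_hours
    print(f"Level 3 takes about {ratio:.1f} times the average effort of Level 1")
```

Under these assumptions, a two-hour course averages 980 hours of effort at Level 3 compared with 158 hours at Level 1, roughly a sixfold difference.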

Example of use:

- For augmented reality: A review by the IEEE notes that one of the greatest advantages of augmented reality is the ability to annotate the environment. Examples include superimposing information over parts of a product as learners scan it, and superimposing location information on a smartphone app, so new workers quickly learn their way around a facility. Note that these applications have uses beyond training.

- For virtual reality: Some applications, such as aircraft simulators and fully functioning medical “dummies” that can respond to treatment, are well known. But other examples include situational simulations, Walmart using virtual reality to teach customer service, and NASA using virtual reality to prepare astronauts for space travel.

Overall assessment:

- Augmented reality: Moderate adoption within the next five years. Despite the hype and the extensive number of literature reviews on the subject, all were light on examples for training and development. Until Training and Development professionals can imagine how to apply this technology, they’re not likely to widely adopt it.

- Virtual reality: Limited to moderate adoption in the next five years. Despite improvements to the technology, cost and time remain barriers to adoption.

MASSIVE OPEN ONLINE COURSES (MOOCS) AND ALTERNATE CREDENTIALS

When MOOCs received widespread attention in 2012, educational technology advocates expected them to disrupt higher education. That’s because among the first MOOCs were computer science courses that were taught academically at Stanford. The instructors wanted to make the courses available to a much wider audience—especially learners in the developing world—by recording the lectures and making them and all other course activities available on the Internet. Virtual students would take the course online at the same time as Stanford students. The only difference was that the virtual students would not pay for the course. The “massive” in these courses also refers to participation levels: more than 150,000 students in some of the first classes.

Over time, the format of MOOCs transformed from 15-week academic courses to shorter four- to six-week courses. Courses included a combination of videos, activities, and online discussions, and most were designed to generate interaction between students because, by necessity, they allowed little to no direct interaction with an instructor. Instead, students interacted with a tutor or teaching assistant. As the courses became shorter, their focus shifted from replicating semester-long academic, for-credit courses to more job-focused courses aimed at working professionals. Today, most MOOC providers offer packages of courses that students can take to earn a credential, which goes by names like micro- or nano-degree and can be earned in considerably less time than a degree. These credentials are called alternative credentials because they offer an alternative to a college degree. Participants pay for these credentials; many courses remain free to study.

“Newness” of the technology: Open education, the concept underlying MOOCs, focuses on making courses available at low or no cost to people who might not otherwise have access to them (including people without the means to attend higher education and working adults). It first emerged in the 1960s. The Open University of the United Kingdom is the most famous of the nearly 65 open universities worldwide. Most of those universities are located in the developing world, the original market for MOOCs. Most use a distance education model, originally correspondence school and more recently e-learning, to reach learners and provide them with learning options that suit their busy schedules.

The failure of MOOCs to disrupt traditional higher education led providers to shift their focus to working adults in the developed world. That shift fills a niche many training groups have abandoned over the last few decades: providing long-term job development beyond the scope of an immediate job. The credentials, although not necessarily standard across providers, increasingly are recognized across employers. And employers increasingly are working with MOOC providers such as Udemy, edX, and Coursera to make these courses available to workers, though most employers expect workers to take these courses on their own time.

Example of use: One of the most popular MOOCs right now is Google’s IT Support Professional Certificate, available through Coursera, a platform for MOOCs, which anyone can take to meet the basic qualifications for this job. Google is providing scholarships for 10,000 students. Google also offers career development and technology courses through Udacity, another platform for MOOCs. Georgia Tech also works with Udacity to offer one of the few online degrees available in this format, an Online Master’s of Science in Computer Science. AT&T makes this program available to its employees. Other companies have made libraries of content in MOOCs available to their workers, and those who complete the courses can receive completion certificates.

Overall assessment:

- MOOCs: Medium to wide use expected in the next five years. Although MOOCs proved disappointing in their initially anticipated application as a replacement for higher education, they have a brighter future in training and development. Part of the reason has to do with practicalities. The first MOOCs had no reasonable revenue stream (they were supposed to be free), and their providers understood neither the politics of university credits (universities only recognize credits from outside their institutions under tightly controlled circumstances) nor the value of degrees (the most desired education credential, especially by those who lack them). By contrast, the training market does not require credits, is willing to pay (especially with prices that are significantly lower than comparable training and academic options and affordable to workers who might pay for MOOCs themselves), and finds the certificates and micro-credentials offered sufficient for employment purposes. Plus, as noted earlier, MOOCs fill a void in the training market.

- Alternate credentials: Moderate adoption in the next five years. Although formal degrees will remain the preferred credential, the cost and time required for higher education will drive workers and employers to seek an alternative that provides both instruction and recognition for completing it. Although demand could grow, only employer recognition will ensure the success of these credentials because that makes earning these credentials worth the investment.

MICROLEARNING

According to independent training researcher Will Thalheimer, microlearning refers to “relatively short engagements in learning-related activities that may provide any combination of content presentation, review, practice, reflection, behavioral prompting, performance support, goal reminding, persuasive messaging, task assignments, social interaction, diagnosis, coaching, management interaction, or other learning-related methodologies.”

Microlearning has certain practical applications. Trainers dealing with complex material can promote transfer by using microlearning as a follow-up to the original training event. By contrast, retailers and similar customer service organizations can only offer training during brief (15-minute) slow periods, and microlearning meets the time constraints. Sales representatives and other busy workers can take microlearning during travel time between locations.

“Newness” of the technology: The term, “microlearning,” is new, emerging in the last five years, but the concepts on which it is based are not. Some attribute the concept of microlearning to spaced reinforcement, a concept introduced in the 1930s that helps learners retain a large body of knowledge by briefly reviewing pieces of the material afterward. It’s called “spaced” because the time between the brief reviews grows longer. Computers facilitated the process of automating these reviews.
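The widening gaps between reviews can be illustrated with a small Python sketch. It shows only one possible schedule: the one-day first gap and the doubling of each later gap are assumptions chosen for illustration, not values taken from the research on spaced reinforcement, and the function name is hypothetical.

```python
from datetime import date, timedelta


def review_dates(training_day: date, reviews: int = 4,
                 first_gap_days: int = 1, growth: float = 2.0) -> list:
    """Return review dates whose spacing grows after each review.

    Assumption: each gap is `growth` times the previous one
    (1, 2, 4, 8 ... days with the defaults).
    """
    dates = []
    gap = float(first_gap_days)
    current = training_day
    for _ in range(reviews):
        current = current + timedelta(days=round(gap))
        dates.append(current)
        gap *= growth
    return dates


if __name__ == "__main__":
    for review_day in review_dates(date(2018, 1, 1)):
        print(review_day.isoformat())
```

With the defaults and a training event on January 1, the reviews fall on January 2, 4, 8, and 16, so each brief review arrives after a longer pause than the one before it.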

Microlearning also emerges from the concept of chunking, which involves structuring material into short, cohesive sequences that have a tight focus and minimize the likelihood of overloading learners with too much information.

Other similar applications of these concepts have emerged over the years, such as electronic performance support (which provides brief instructional material on demand), and tips of the day that appear when starting an application (also like Microsoft’s dreaded Clippy).

Furthermore, designing training programs for use during brief breaks or when workers are traveling between locations is not new. That’s one of the reasons audiotapes (similar to podcasts, but using audio cassettes) were one of the most popular forms of training in the 1980s and 1990s, according to Training’s Annual Industry Report.

Some microlearning advocates claim microlearning responds to new realities in the learning environment. Independent training researcher Patti Shank pushes back on this. For example, in response to the claim that microlearning “helps with modern learning because technologies have changed how we learn,” Shank responds: “Humans have the same cognitive architecture they’ve had for thousands of years.”

Similarly, in response to the claim that microlearning “works because it’s similar to how people find answers to their questions: online searches,” Shank responds: “Finding answers to quick questions and learning for application (deep learning) aren’t the same.” And in response to the claim that microlearning “is needed because people now have a smaller attention span,” Shank responds: “The research about a lowering of our attention span was completely made up … attention spans vary … and are likely to wander over time.”

In addition, Shank warns trainers that microlearning does not meet every learning need—just those that can be accomplished in short bursts, like remembering material from a longer course or building upon material from that course, or to support the development of skills on the job.

Example of use: One large employer has been using microlearning for years under the name “mailcasts.” It provides bi-weekly reminders to managers about performing various personnel-related tasks. Managers receive the reminder by e-mail, and have a choice of viewing the reminder as a text note or a video.

The American College of Ophthalmology has leveraged its archive of cases to present brief teaching cases to its members, where they can brush up on diagnosis and treatment skills, and receive Continuing Education Units (CEUs) for doing so.

Language learning applications Duolingo and Babbel both represent applications of microlearning, even if they do not label themselves that way.

Overall assessment: The name, microlearning, is a fad and is likely to fade in the next five years. That said, packaging training in smaller chunks is likely to have medium impact in the next five years, even if people no longer call it microlearning.

Saul Carliner is Research director for Lakewood Media Group and a professor of Educational Technology at Concordia University in Montreal.