Some recent studies have revealed that much progress has been made in connecting learning and development to key impact measures and even occasionally measuring the return on investment. Essentially, there are seven trends, supported by surveys, polls, and practice, that highlight good news for learning and development accountability.

The Value Chain

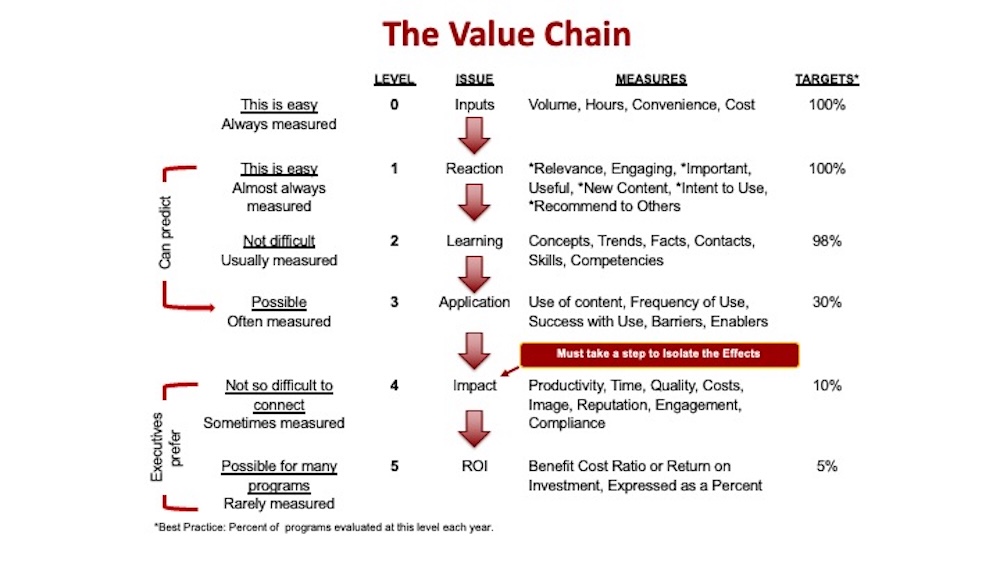

But first, let’s review the data sets that are possible from any learning program. The chart above shows the chain of value that is always present whenever a learning program is implemented; the question is which levels to evaluate. The input into a learning program is Level 0, followed by five levels of outcomes: reaction, learning, application, impact, and ROI.

Over the years, we’ve been moving down this value chain. Reaction and learning have been measured for almost a century. In the second half of the last century, we made much progress on Level 3, Application. Starting late in the last century, we moved into Level 4, Impact, and even Level 5, ROI. This value chain is based on a logic model that dates back to the 1800s. Raymond Katzell brought it to the learning and development field in the 1950s (Katzell). Many others have adopted the concept of these levels, including the Kirkpatricks, as well as our ROI Methodology. In 1983, we wrote the first book on training evaluation, highlighting these five levels of outcomes as possibilities (Phillips).

7 Trends for Learning Evaluation

- Impact is king. It’s easy to think about the chain of value and see that impact is an important data set. According to the value chain, application drives impact. Application and impact go together.

You make a difference in an organization when you have both application and impact. Impact represents measures already tracked in the systems of organizations of all types. Whether it is a business, a government agency, a nongovernmental organization, or a nonprofit, there are impact measures under the major headings of output, quality, cost, and time. A typical organization has hundreds of these measures already established, usually called key performance indicators (KPIs). Even participants in the learning program know and accept these measures, and in many cases their own performance is reflected in them. They are easily identified and are very important to executives. In studies going back almost two decades, impact was the #1 measure executives wanted to see (Phillips and Phillips). Impact is where we’ve made so much progress.

In a recent survey of 227 talent development professionals conducted by the Association for Talent Development (ATD), 43 percent of organizations reported that their business goals and learning goals are very aligned (ATD). This is encouraging news. In a benchmarking study of users of the ROI Methodology, 37 percent of programs are being evaluated at the impact level (ROI Institute). This study involved 246 organizations in their first five years of implementing the ROI Methodology. In a recent benchmarking study on leadership development sponsored by the World Bank, 17 percent of leadership programs were evaluated at the impact level (ROI Institute).

Participants have realized for many years that it is not just what you know, but what you do with what you know. Now they are beginning to realize that it is not just what you do, but the consequence of what you do. Application without impact is just being busy. Measuring impact is becoming a reality as learning teams push evaluation to that level. To be credible, an impact evaluation must separate the effects of the learning program from other influences. This step is achievable and is one of the standards of the ROI Methodology.
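One way to isolate a program’s effect is a control group comparison. As a minimal Python sketch, assuming a comparable untrained group is available, the improvement attributable to the program is the trained group’s change in an impact measure minus the control group’s change. The `GroupMeasure` class, the `isolated_effect` function, and the numbers are hypothetical illustrations, and other isolation techniques exist for cases where a control group isn’t practical.

```python
from dataclasses import dataclass


@dataclass
class GroupMeasure:
    """Hypothetical: average value of one impact measure before and after the program period."""
    before: float
    after: float

    def change(self) -> float:
        return self.after - self.before


def isolated_effect(trained: GroupMeasure, control: GroupMeasure) -> float:
    """Improvement credited to the program: trained-group change minus control-group change.

    Assumes the groups are comparable, so the control group's change stands in
    for all the other influences (market shifts, new systems, and so on).
    """
    return trained.change() - control.change()


# Hypothetical numbers for illustration only.
trained = GroupMeasure(before=100.0, after=130.0)
control = GroupMeasure(before=100.0, after=115.0)
print(isolated_effect(trained, control))  # 15.0
```

Here the trained group improved by 30 units, but the control group suggests 15 of those would likely have happened anyway, so only 15 units are credited to the program.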

- Progress is real. All these studies reveal that progress is being made. While these results are encouraging for evaluating talent development at the impact and ROI levels, getting here has been a long effort. ATD has published 30-plus books on impact and ROI, with more than a dozen case study books. Results at these levels also help L&D teams win awards from Training magazine and ATD.

The ATD study shows that 40 percent of organizations have a full-time evaluator for learning. Companies are now putting resources behind measurement, and it is paying off. They are starting programs with the end in mind, and it’s paying off. They are presenting impact data to executives, and it’s paying off. The #1 reason for conducting impact and ROI evaluations is to protect or secure the budget. The #2 reason is to improve support for learning. The #3 reason is to make programs better (process improvement).

- ROI is driven by the C-suite. When we benchmark users of the ROI Methodology, one question we address is, “Who is driving the ROI implementation in the organization?” The latest benchmarking of best practice shows that 57 percent of ROI implementations are driven by the C-suite (ROI Institute). This share is down from much higher levels in earlier years. Evaluators and program owners now drive almost half of the ROI implementations. This is good news.

In the ATD study, 46 percent of respondents indicate they are successful at comparing the costs and benefits of a learning program (ATD). This is amazing! While the C-suite is still the main driver of ROI, more organizations are being proactive and getting ahead of the request.

The ROI shows whether a program is worth it by comparing its benefits to its costs. Those who fund programs want to see this level of value; they ask, “Is it worth it?” Is it a cost or an investment? If it is an investment, they will support it. If learning is considered a cost, and not an investment, the budget usually will be reduced. A cost is easy to minimize, delete, reduce, diminish, pause, or freeze; you get the picture.

For major projects, the return on investment is calculated with the same ROI formula used in finance and accounting. The use of ROI has been growing and will continue to grow because it is the ultimate accountability measure. ROI shows up every day in our lives as we make our own purchasing decisions. It should come as no surprise that the people who fund learning and development budgets would like to see the ROI of these expensive and important programs, particularly programs whose value may be difficult to see, such as soft skills programs. That leads to the next trend.

- Soft skills are a target. Executives understand the value of job-related training, technical training, compliance training, and investments in science, technology, engineering, and mathematics (STEM). These are easy to see as necessities, and their potential impacts are often easy to see as well. But when it comes to soft skills programs, such as those on culture, leadership development, communications, or empowerment, it is more difficult for executives to see the connection to impact. It’s not that they don’t support these programs; they would just like to see the connection. So these programs are a frequent target of requests for impact and ROI evaluation.

This trend is underscored in the ROI Certification process. To date, approximately 10,000 individuals have been certified in the methodology, which means each has conducted an ROI study. Here’s the trend: soft skills programs are the #1 target for ROI Certification projects.

Each person participating in the certification must select a program to evaluate at the impact and ROI levels. In a session with an average of 20 participants, three or four typically select a leadership development project, clearly making leadership development the #1 choice for their first ROI study. This is an amazing development. Examples appear in our recent book, “Proving the Value of Leadership Development” (Phillips, Phillips, Ray, and Nicholas).

We’ve also noticed that some of the most recent targets for ROI studies are culture change and change management. Although executives recognize their importance (organizations can live and die by culture), the value of a culture change project is difficult for them to see unless data show how much of the impact is attributable to the program.

- It’s easier than you think. Amid all the positive news, there is still the nagging perception that evaluation at the impact and ROI levels is difficult and time-consuming. When people think about impact analysis and ROI analysis, they think about mathematics, statistics, finance, and accounting. It conjures up images of extensive analysis, formulas, and numerous complex calculations. That’s not the case. In reality, an ROI study that meets the needs of stakeholders and will be accepted by the chief financial officer can be developed with no more than fourth-grade math.
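To illustrate how simple the final arithmetic is, here is a minimal Python sketch of the two summary figures commonly reported in an ROI study: the benefit-cost ratio (BCR), which is program benefits divided by program costs, and ROI as a percentage, which is net program benefits divided by program costs, times 100. The dollar amounts are hypothetical. The sketch assumes the benefits have already been isolated to the program and converted to money and that the costs are fully loaded; those earlier steps are where most of the work in a real study happens.

```python
def benefit_cost_ratio(benefits: float, costs: float) -> float:
    """BCR: monetary program benefits divided by fully loaded program costs."""
    if costs <= 0:
        raise ValueError("program costs must be positive")
    return benefits / costs


def roi_percent(benefits: float, costs: float) -> float:
    """ROI (%) = (net program benefits / program costs) x 100."""
    if costs <= 0:
        raise ValueError("program costs must be positive")
    return (benefits - costs) / costs * 100


# Hypothetical program: $750,000 in monetary benefits, $500,000 in costs.
benefits, costs = 750_000.0, 500_000.0
print(f"BCR = {benefit_cost_ratio(benefits, costs):.2f}")  # BCR = 1.50
print(f"ROI = {roi_percent(benefits, costs):.0f}%")        # ROI = 50%
```

With these hypothetical figures, each dollar invested returns $1.50 in benefits, a net gain of $0.50 per dollar, or a 50 percent ROI.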

It’s a step-by-step process, and the options for each step have already been determined. It’s just a matter of selecting the right path. Other team members should support you, because delivering results is not something the evaluator does alone. While the evaluator collects data, reports the data, and makes recommendations, others must help design for results and deliver them. Many programs are now connected to the business up front, at the very beginning. When programs are designed for impact, it is easier to see the impact at the end.

Program participants can help too, particularly those involved in soft skills programs. Start with the end in mind by having participants select one or two of their KPIs that they would like to improve, choosing only measures they can improve by using the competencies from the program. This can make a huge difference. Participants now own those measures, and they own the process to improve them. They want to see it through and see the impact results. They will help collect and report the data because it is their own improvement at stake. Impact and ROI analysis is not that difficult when others help. More information on this methodology is available in ATD’s book, “The Business Case for Learning.”

- A culture of accountability helps. In our benchmarking project for leadership development, creating a culture of accountability is one of the five skills that will be important in the next two years. When employees know and accept their performance measures, the process works much better. A culture of accountability ensures that employees understand their performance is not just what they do; it’s the impact they deliver that’s important. These measures are often KPIs in performance management systems. When they are in place, employees can easily see the connections to impact, and they want the programs they are involved in to connect to these measures and improve them. Employees usually accept a culture of accountability when they are not asked to focus on measures beyond their control or access. It’s their own performance that they are delivering. They will help you with this issue.

A major culture firm, Culture Partners, applies this concept in its work (Culture Partners). It starts with the end in mind, setting very clear impact measures it wants to improve by changing the culture. Those measures keep the culture change journey focused and clearly show the why of the program. The impact is the why, and participants in programs want to know why. A culture of accountability helps get more participants involved in delivering impact.

- Resistance is breaking down. There has been resistance to evaluating at these levels for many reasons. Executives who fund and support learning programs do not resist pushing measurement to the impact and ROI levels; that’s what they want. The resistance typically comes from your own team. Team members resist because it’s a change, it’s additional effort, and they fear the outcome. If the program doesn’t deliver value, will that reflect poorly on the current team and the current programs? Will those programs be discontinued? The answer is usually “No.” When you are proactive and conduct the evaluation, and it shows the program is not working, the same evaluation will show why. That gives you data you can use to make changes going forward. When you are proactive, you are not punished because a program isn’t working; you are correcting a problem that was already there. In reality, the problem is rarely the content of the learning. It is usually a lack of support for using the content: something else is getting in the way.

Resistance is breaking down even among program suppliers. We recently completed two leadership program evaluations that were funded by the external leadership development provider. The provider raised the issue, suggested its program should be evaluated, and paid for the evaluation. Supplier resistance is mostly in the past. More and more, suppliers are stepping up to deliver value and show it.

It’s Up to You

There you have it, the seven trends for increased evaluation at the impact level. You can make a difference, and you can get there. As Dr. Patti P. Phillips, CEO, ROI Institute, says: “When it comes to delivering results:

Hope is not a strategy.

Luck is not a factor.

Doing nothing is not an option.

Things have changed in terms of the accountability of our expenditures. Change is inevitable. Progress is optional.”

Learning is a precious resource. In the past, much of it was wasted because what was learned was not used when we wanted it to be used. We are getting much better at this, and we are making much progress. But more progress is needed. There is still resistance, and some people just don’t know how to do it. The path is in front of us. You can get there, and you can do it. We have been pleased to be an important part of this movement for the past 30 years. We are ready to help anyone with this challenge.

For more information, see the most recent edition of our principal resource, Return on Investment in Training and Performance Improvement Programs (Phillips, Phillips, and Toes).

References

Katzell, R. A. (1952). Can we evaluate training? A summary of a one-day conference for training managers. A publication of the Industrial Management Institute, University of Wisconsin, April 1952.

Phillips, J. J. (1983). Handbook of Training Evaluation and Measurement Methods, 1st Edition. Houston, TX: Gulf Publishing.

Phillips, J. J., and Phillips, P. P. (2009). Measuring for Success: What CEOs Really Think About Learning Investments. Alexandria, VA: ASTD Press.

Association for Talent Development. (April 2025). “The Future of Evaluation Learning and Measuring Impact: Improving Skills and Addressing Challenges,” Alexandria, VA: ATD Press.

ROI Institute. 2020 Benchmarking Study. Accessed at: https://roiinstitute.net/2019-roi-institute-benchmarking-report/

ROI Institute. (2025). World Bank Leadership Development Benchmarking Study. For more information on this case study, please contact info@roiinstitute.net.

Phillips, J. J., Phillips, P. P., Ray, R. R., and Nicholas, H. (2023). Proving the Value of Leadership Development: Case Studies from Top Leadership Development Programs. Birmingham, AL: ROI Institute.

Phillips, P. P., and Phillips, J. J. (2017). The Business Case for Learning: Using Design Thinking to Deliver Business Results and Increase the Investment in Talent Development. Westchester, PA: HRDQ and Alexandria, VA: ATD Press.

Culture Partners. (2025). Accessed at: https://culturepartners.com/

Phillips, P. P., Phillips, J. J., and Toes, K. (2024). Return on Investment in Training and Performance Improvement Programs. London, UK: Routledge.