Evaluation is more important than ever to show the value of leadership development, especially now with the combination of limited resources and the simultaneous changes in how we work, learn, and lead others. With this context in mind, we cover how to create the most valuable evaluations for internal leadership development programs.

Typically, the purpose of internally focused evaluations is to show that resources were well spent: the time invested in training design and implementation, and the hours participants devoted to the program. Another common purpose is to show that the program contributes to the organization’s goals, for example by positively affecting performance, turnover, or inclusion. The most valuable evaluations accurately identify the impact of the leadership development program by following the scientific method: clarifying the purpose, designing the appropriate methods and measures, and reporting outcomes in the manner best suited to a particular audience. Some creativity and realism must be involved; these evaluations typically cannot be true experiments, but their rigor can be greatly increased by applying quasi-experimental design elements. This article outlines the scientific method framework as applied to leadership development evaluations, provides examples from an evaluation of a cohort-based leadership development program, and identifies common challenges at each step and how to mitigate them.

Overview of the 4 steps

We propose these four steps to rigorous leadership development evaluations.

Step 1: Identify the purpose of the evaluation and the outcomes the program might impact.

First, think critically about why you are evaluating the program. There is typically more than one reason to evaluate, and these reasons will guide future decisions in this process. A few common examples are:

- To show the program achieves its goals

- To show the program’s value to the organization

- To revise the program for the future

- To recruit future participants

- To support expanding current and future resources for the program

- To support expanding the program to other areas of the organization

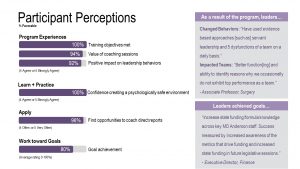

Example Application: This cohort-based program is geared toward Director and Executive Director level leaders. The program includes more than 50 hours of development, including developmental assessments, coaching, interactive simulations, and hands-on workshops covering topics such as emotional intelligence, servant leadership, teamwork, and unconscious bias. The purpose of our program evaluation was to show the value of the program to internal and external stakeholders, including our executive leadership team, advisory councils, and future participants.

Second, create a list of outcomes the program might positively impact: the “why” behind offering the program. Outcomes should be linked with the goals of your organization, including a lens toward diversity and inclusion. To identify potential outcomes of interest, think through the desired impacts of the program on relevant groups, including participants, other employees, patients or clients, and the organization as a whole. Below are a few examples of common outcomes:

| Individual | Group | Organization |
| --- | --- | --- |
| Awareness | Communication | Revenue |
| Attitude | Functioning | Client/Patient Satisfaction |
| Competency | Effectiveness | Performance |
| Confidence | Engagement | Employee Turnover |
| Satisfaction | | |

Example Application: We wanted to know if the effects of this program could be felt throughout the institution, beyond the leaders participating. Specifically, do the direct reports of participants feel more positively about the organization, their job, and their leadership because their supervisor participated in the program? And, how does this happen?

Step 2: Figure out the best way to measure impact by measuring relevant outcomes and predictors.

There is no such thing as a perfect measurement of outcomes or predictors, so where possible use multiple measures of the same outcome or predictor, and be clear about the limitations of each. Whenever possible, use existing, validated measures, and create your own survey items only when absolutely necessary.

First, identify how to measure the outcomes. Below are some examples of outcomes that leadership development may impact, and how the data might be obtained.

| Pull from HR records | Existing surveys | New surveys (topic likely depends on the purpose of program) |
| --- | --- | --- |
| Performance | Developmental 360 surveys | Teamwork |
| Tenure | Employee engagement surveys | Specific leadership skills or competencies |
| Promotion | Inclusion goals | |

Second, identify predictors to explain why the program works. This is helpful for program refinement, redesign, and expansion. Typically, this involves designing survey items to determine whether each aspect of the program contributed to the desired outcomes. Below are some examples of leadership development program predictors.

| Predictor | Description |
| --- | --- |
| Program Completion | Did a participant complete the program? |
| Utility reactions | How valuable did a participant find certain components of the program? |
| Program Engagement | How engaged was a participant in the program? |

Example Application: We used engagement survey data from participants’ direct reports to measure the outcome, and used ratings of program engagement and participation records as predictors to examine how the program might impact employee engagement.
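
One simple way to relate a predictor to an outcome is a correlation. The sketch below is illustrative only: the data are hypothetical, the names `coach_rating` and `team_score` are assumptions rather than variables from our evaluation, and a Pearson correlation is just one of several reasonable choices.

```python
from math import sqrt
from statistics import mean


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical data: one entry per participant.
# coach_rating: coach-rated program engagement (1-5 scale)
# team_score: mean engagement survey score of that participant's direct reports
coach_rating = [2, 3, 3, 4, 5, 5]
team_score = [3.1, 3.4, 3.2, 3.9, 4.2, 4.0]

r = pearson_r(coach_rating, team_score)
print(f"r = {r:.2f}")
```

A positive value suggests that teams of more engaged participants tend to report higher engagement, although a correlation alone does not rule out alternative explanations.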

Step 3: Add in experimental design elements.

Adding elements of rigor helps rule out confounds, which are alternative explanations for the results you might see. For example, was an increase in employee engagement due to your development program or due to another intervention that happened around the same time? Adding experimental rigor allows you to strengthen the conclusions you can draw from your results. Below are some examples of design elements for evaluations (note that this is not an exhaustive list).

| Element | Description/Notes |
| --- | --- |
| Add a pre-test | Collect data on appropriate measures before the program so the pre-post difference can be calculated. This can be helpful for capturing increases in knowledge, awareness, and confidence. |
| Identify a control group | Collect similar data from leaders in similar roles within your organization who are not currently participating in the program. |
| Follow up long-term | Many important organizational outcomes are quite complex, and take significant time to really make a difference in an organization (e.g., 6 months or a year). Collect additional time points of outcome data when possible. |
| Ask others | In addition to self-report, ask relevant others (supervisor, peers, direct reports, instructors, coaches) about behavior change on-the-job and the effects of those changes. |

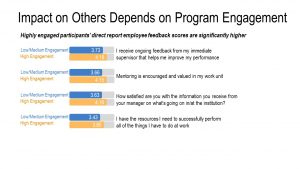

Example Application: We asked coaches and instructors to rate participants’ program engagement, because we expected their ratings to be a more accurate indicator than self-report. We also compared the employee engagement results of participants’ direct reports to those of a control group made up of similar leaders’ direct reports.
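
When both a pre-test and a control group are available, the two can be combined by comparing the pre-post change among participants with the change in the control group. The following is a minimal sketch under stated assumptions: all scores are hypothetical, the function names are ours, and the calculation shown (a simple difference in mean changes) is one common approach rather than the specific analysis used in our evaluation.

```python
from statistics import mean


def mean_change(pre, post):
    """Average within-person change (post minus pre) for paired scores."""
    if len(pre) != len(post) or not pre:
        raise ValueError("pre and post must be non-empty and the same length")
    return mean(b - a for a, b in zip(pre, post))


def difference_in_differences(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """Change in the participant group minus change in the control group."""
    return mean_change(treat_pre, treat_post) - mean_change(ctrl_pre, ctrl_post)


# Hypothetical engagement scores (1-5 scale), one value per leader's team,
# listed in the same order before and after the program.
participants_pre = [3.2, 3.5, 3.0, 3.8]
participants_post = [3.6, 3.9, 3.3, 4.0]
control_pre = [3.3, 3.4, 3.1, 3.7]
control_post = [3.4, 3.5, 3.2, 3.8]

did = difference_in_differences(
    participants_pre, participants_post, control_pre, control_post
)
print(f"Participant change beyond control: {did:.3f}")
```

Subtracting the control group's change removes shifts that affected everyone over the same period, such as an organization-wide initiative, which is the kind of confound these design elements are meant to address.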

Step 4: Collect and analyze data, then report the results.

We have three recommendations regarding collecting and analyzing data. First, gain an understanding of existing organizational data and how to access data from the systems in which they are housed. Second, collect new data from participants and relevant others using online survey software, and use statistical analysis tools appropriate for your particular purpose. If these tools are new to you, complete your own development (e.g., training, coaching) and follow best practices to ensure you collect and analyze data efficiently and effectively. Third, wherever possible, automate processes (e.g., schedule survey deployment and reminders) to reduce human error and time spent on these tasks.
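
As a small illustration of the third recommendation, reminder dates can be generated rather than tracked by hand. This is a sketch only: the survey window and weekly spacing are hypothetical, `reminder_schedule` is a name we made up, and most survey platforms offer their own scheduling features that may be preferable.

```python
from datetime import date, timedelta


def reminder_schedule(open_date, close_date, every_days=7):
    """Return reminder dates spaced every_days apart, strictly between
    the survey's open and close dates."""
    if close_date <= open_date:
        raise ValueError("close_date must be after open_date")
    if every_days < 1:
        raise ValueError("every_days must be at least 1")
    reminders = []
    current = open_date + timedelta(days=every_days)
    while current < close_date:
        reminders.append(current)
        current += timedelta(days=every_days)
    return reminders


# Hypothetical survey window: three weeks, with weekly reminders.
schedule = reminder_schedule(date(2024, 3, 4), date(2024, 3, 25))
for day in schedule:
    print(day.isoformat())
```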

Example Application: We found that direct reports of program participants responded no more positively to our employee engagement survey than the direct reports of a control group of similar leaders. However, the direct reports of more engaged participants responded more positively to employee engagement items than the direct reports of participants with lower program engagement. The difference was significant for direct reports’ perceptions of feedback from supervisors, the value of mentoring, satisfaction with information received from supervisors, and having the resources to perform successfully.
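
To show what testing such a group difference can look like, here is a hedged sketch of a two-sided permutation test on the difference in group means. It is not the analysis we ran: the scores are hypothetical, the function and variable names are assumptions, and the sketch treats each score as independent, which ignores that direct reports are nested within leaders.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_resamples=5000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns the observed difference (mean of a minus mean of b) and the
    share of random relabelings with a difference at least as extreme.
    """
    if not group_a or not group_b:
        raise ValueError("both groups must be non-empty")
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        # Small tolerance guards against floating-point noise on ties.
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
    # Add-one correction so the estimate is never exactly zero.
    p_value = (extreme + 1) / (n_resamples + 1)
    return observed, p_value


# Hypothetical direct-report engagement scores (1-5 scale).
high_engagement_teams = [4.2, 4.0, 4.4, 3.9, 4.1, 4.3]
low_engagement_teams = [3.6, 3.8, 3.5, 3.9, 3.4, 3.7]

diff, p = permutation_test(high_engagement_teams, low_engagement_teams)
print(f"difference = {diff:.2f}, p = {p:.4f}")
```

A permutation test was chosen for the sketch because it needs only the standard library and makes few distributional assumptions; a t-test or a multilevel model in a statistics package would be a more typical choice in practice.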

How you report results depends heavily on the purpose of the evaluation. Overall, the goal is to convey the results of your evaluation convincingly, using data, and to tell that story in the way that is most meaningful for the intended audience.

Example Application: The value and benefits of this program do not come simply from signing up or showing up, but from being fully engaged in the program. We presented these results to our stakeholders, in this case future participants of the program, to show how they can get the most out of it. Included here is a selection of those results.

Conclusion

Kirkpatrick’s evaluation model has been one of the most cited frameworks for what should be measured. We put the what in the context of the how. Leadership development has an impact, and when leadership support is strong, rigorous evaluation may not always be needed. When resources become scarce, however, having the data to back up the investment helps ensure the program’s continuity. If you follow this 4-step framework and focus on your organization’s strategic priorities and goals at every step, program evaluation can and should be a vital part of your leadership development programs, helping to ensure their impact for years to come.

Results Examples

Common challenges and how to mitigate them.

Step 1: Identify Purpose and Outcomes

| Common Challenges | How to Mitigate |
| --- | --- |
| You have so many questions that you can’t figure out what you’re trying to get out of the evaluation. | Concentrate on items that will show the impact on organization strategy and goals. |
| There is resistance to taking surveys in the organization. | A culture that does not value surveys or research cannot be changed without deploying surveys. Craft clear statements regarding confidentiality and value-add and send the survey out anyway. |

Step 2: Measure Predictors and Outcomes

| Common Challenges | How to Mitigate |
| --- | --- |
| The program has already been deployed and evaluated in the past, so some survey questions already exist, but they are not of our choosing. | This is a double-edged sword. We recommend keeping as many original items as possible to track change over time and adding new, targeted items. Remove historical items once they are no longer valuable or relevant, show little change, or already score at the top end. |

Step 3: Add Rigor

| Common Challenges | How to Mitigate |
| --- | --- |
| The program has already been deployed so we can’t add a pre-test. | Evaluation design should ideally start during program development, in close collaboration with program designers. A pre-test is probably the most important aspect of rigor because it allows a more powerful within-subjects research design. However, if this timing is not possible, evaluation can still be very effective when implemented later; implement as many other aspects of rigor as possible, e.g., control group, other ratings, etc. |

Step 4: Collect Data, Analyze, and Report

| Common Challenges | How to Mitigate |
| --- | --- |
| You are unsure what kind of data/results will be most compelling for your audience. | Have a conversation with stakeholders about their expectations while designing the evaluation. Be sure to collect multiple kinds of data upfront (quantitative survey data, objective organizational data, open-ended responses) so you have options in what to present. |